Extract and transform text the way you need it

What is Manipulist?

Manipulist is a text manipulation tool that lets you edit digital text in seconds.

Load your input text, select tools, apply them to transform the text, and export the result.

Who should use it?

Anyone who works with office suites, text editors, or IDEs, as well as online writers, will benefit from Manipulist.

Use it alongside your favourite text software to speed up your writing and edit large amounts of text in one go.

What can you do?

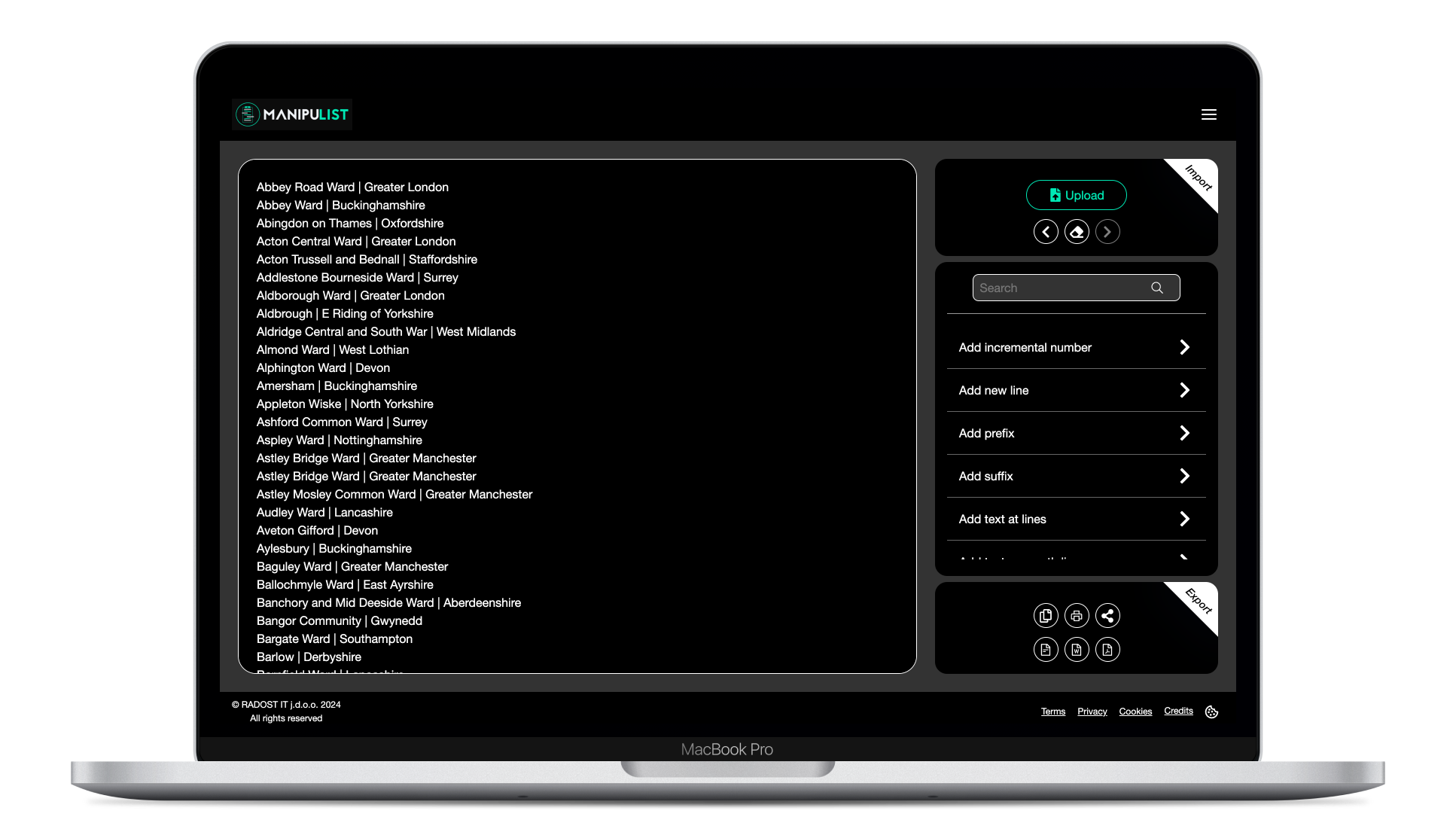

Import a paragraph or an entire text file you would like to edit.

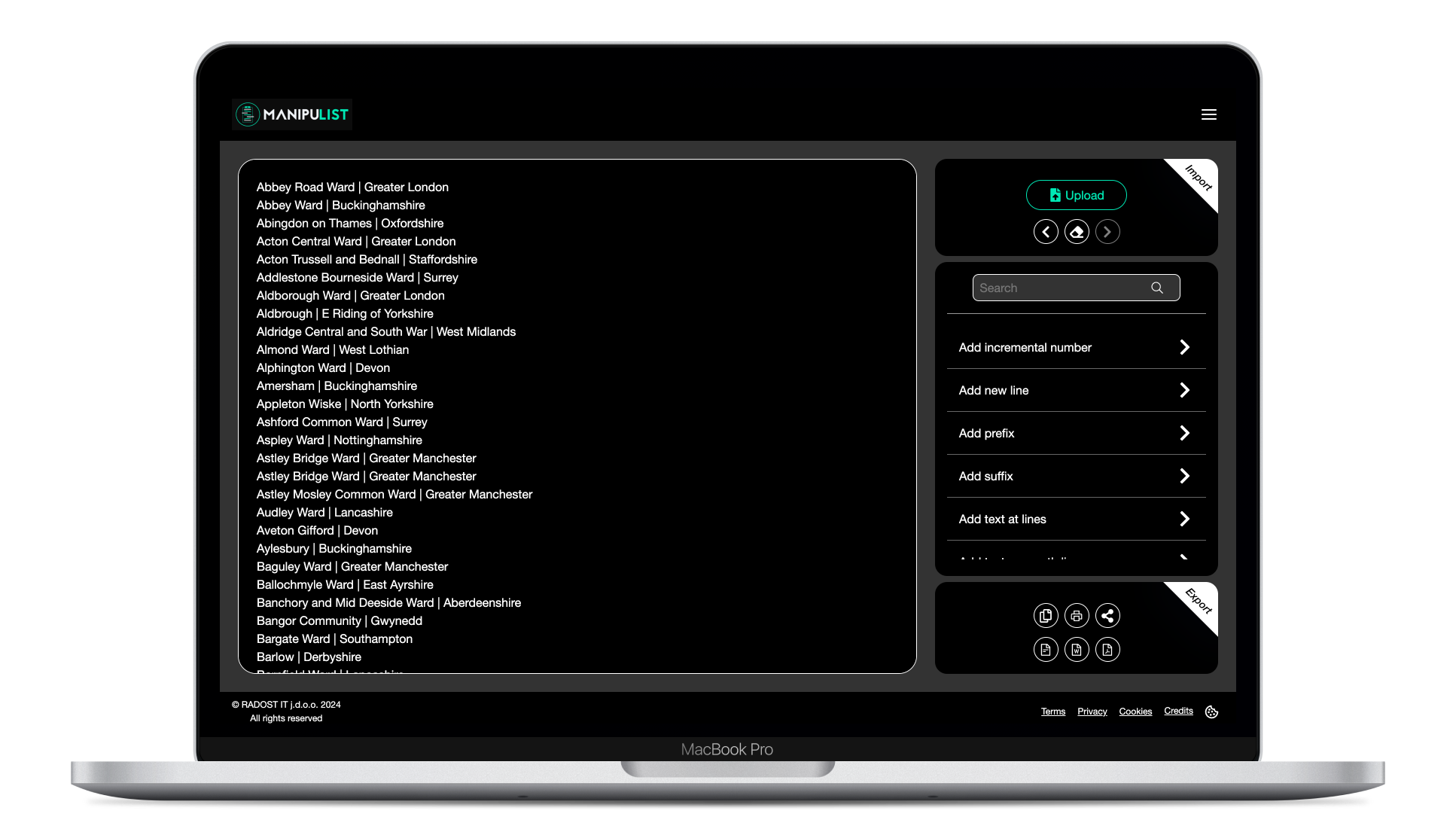

You can add, remove, extract, encode, replace, and trim text, sort lines, and more.

The PRO version gives you over 30 tools to choose from.

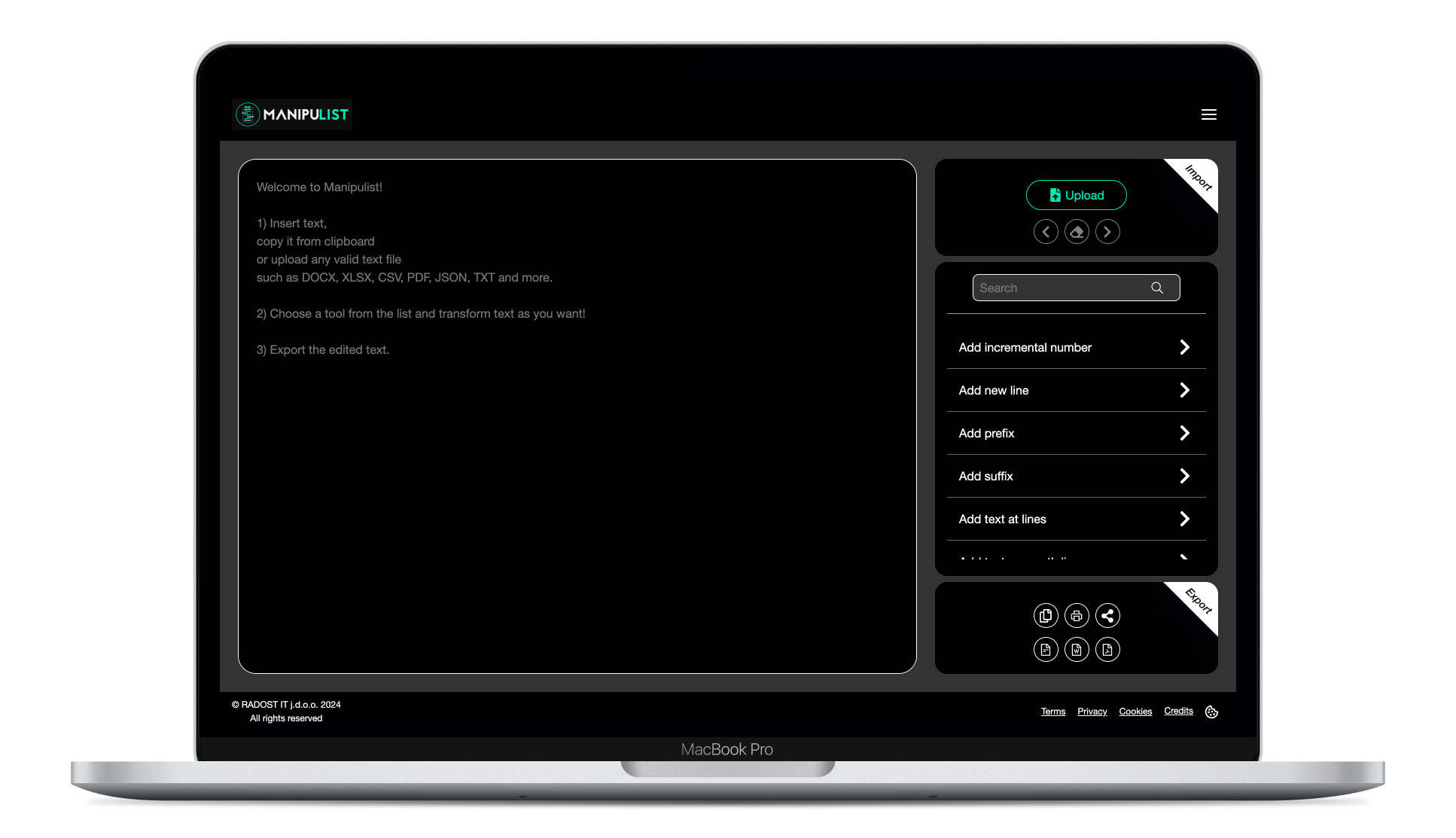

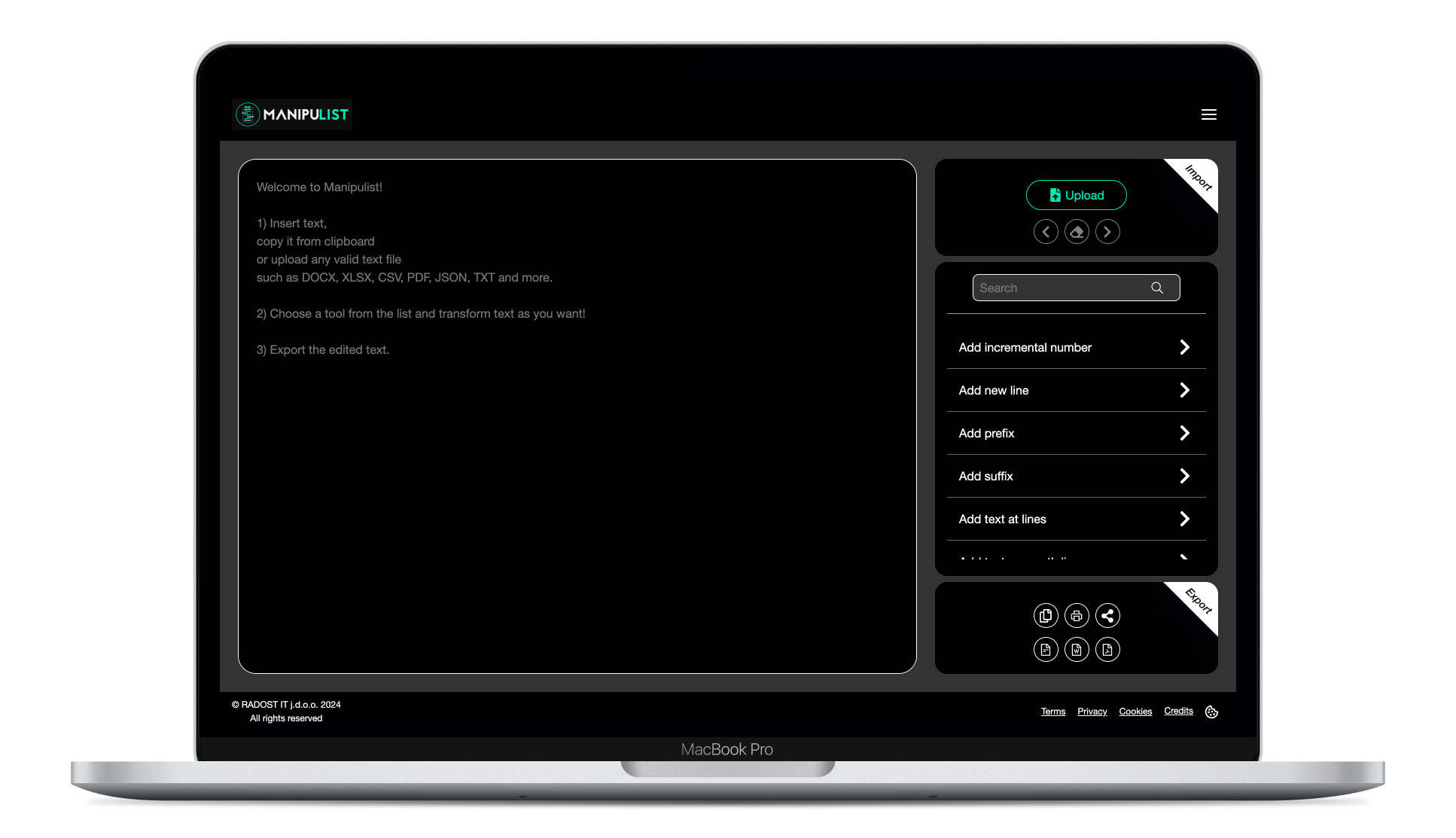

Step 1 - Load Text

To start, add text to the TextArea by typing, pasting, or uploading a file.

Currently, the following file formats can be uploaded: plain text, DOCX, PPTX, XLSX, and PDF.

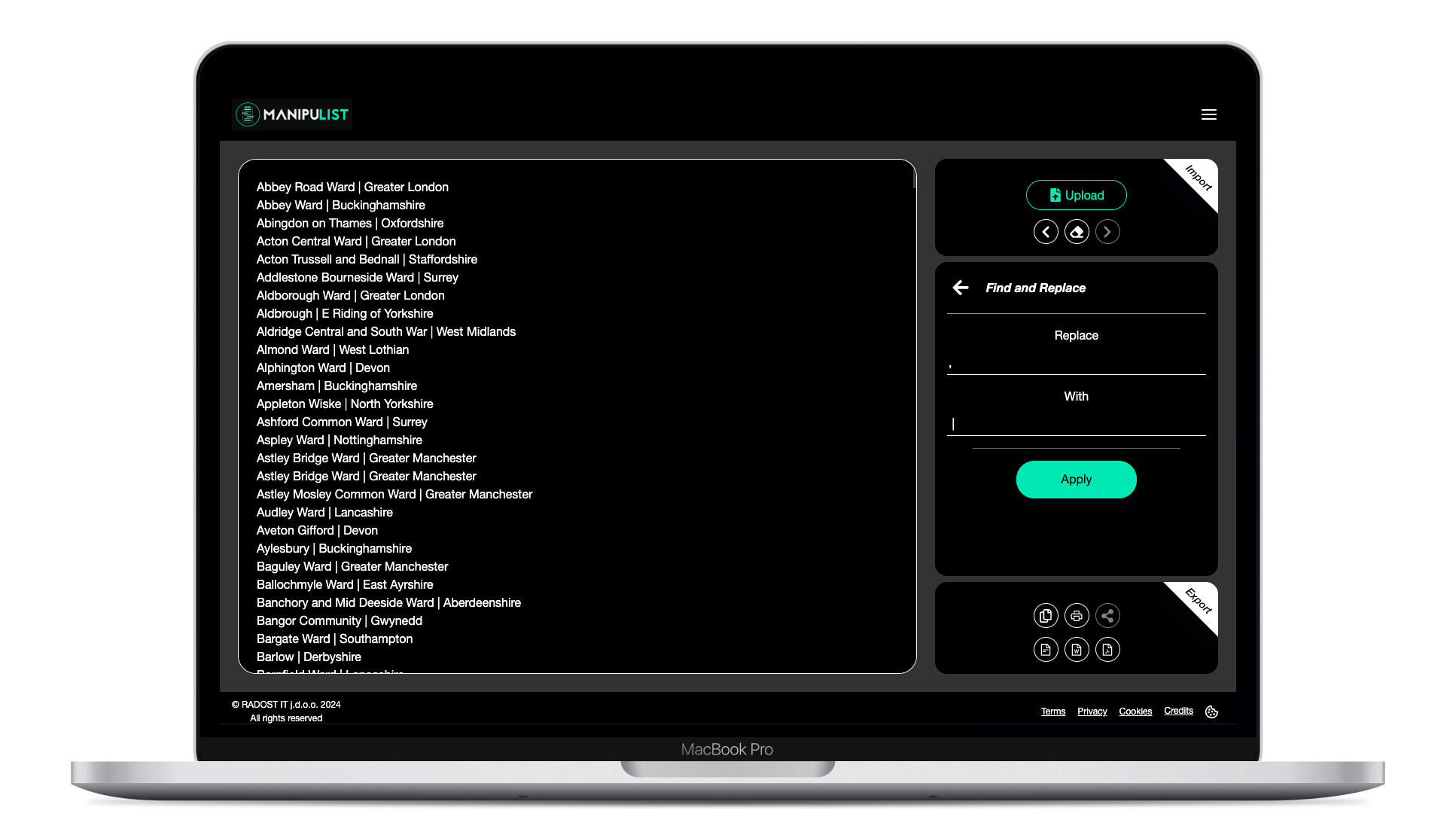

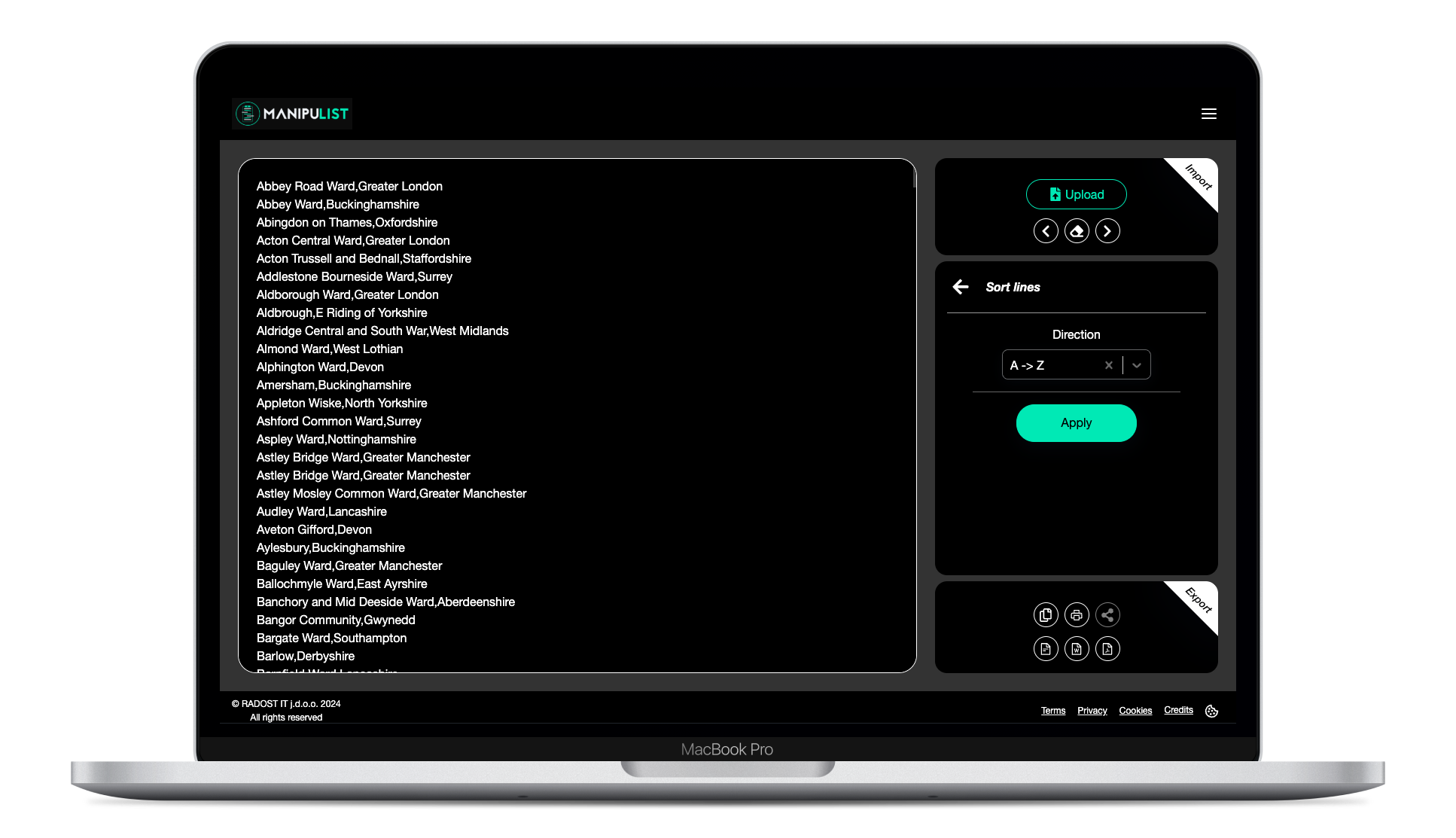

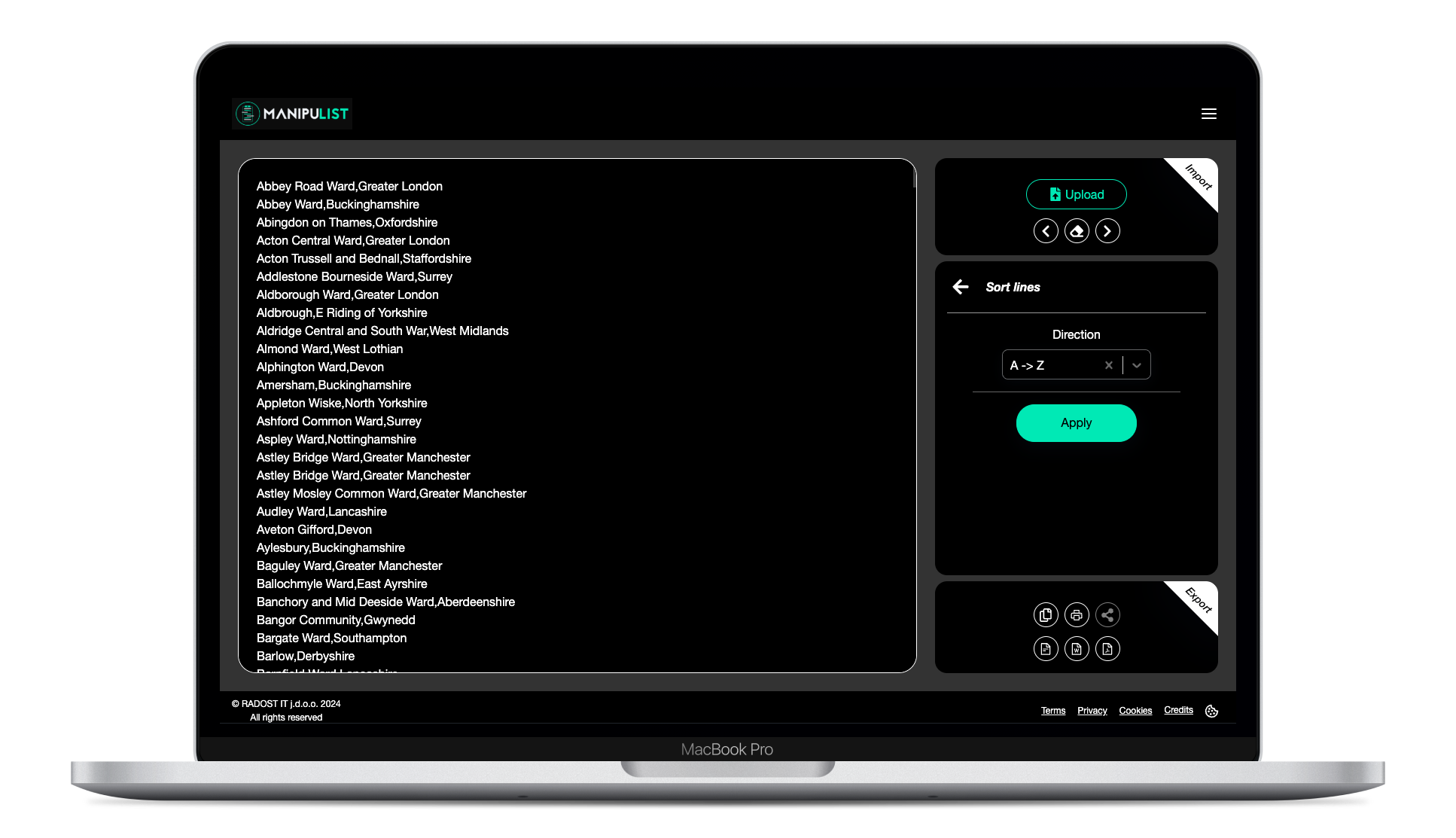

Step 2 - Choose Tools

Next, select a tool from the list in the Tools area. If the tool requires no input, the text is transformed immediately.

If the tool requires input, the sidebar displays all required input fields. Fill in the input fields and press the Apply button to transform the text.

Step 3 - Export

When you are happy with the edited text, you can export it by selecting one of the available options in the Export area.

The currently available export options are: copy to clipboard, print, share, and export as a TXT, DOCX, or PDF file.
